Google Play’s New “Experiments” Tool – you need to take advantage of this

Everyone knows that good app pages are crucial for an app’s success. The problem is, no one really knows what constitutes a good app page. App marketers can speak with great confidence about best practices, but no one knows what actually works better on an app page. That is, until now.

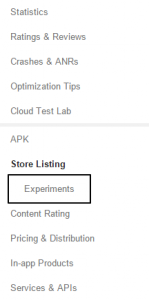

If you’re an Android developer, you might have noticed a new ‘Experiments’ section in the left sidebar of your Google Play Developer Console. If you haven’t noticed it yet, go to your Google Play Developer Console, open ‘Store Listings’ and be enlightened.

What is ‘Experiments’?

‘Experiments’ is Google Play’s new native A/B testing platform for app pages. Unlike commercial A/B testing tools such as StoreMaven, which use a page that looks like an app page, here you test on your actual app page.

What can I test?

Just about any feature of your app page, except for the title. You can run a global test, which lets you test graphics only. Alternatively, you can run the test in a single locale, which also lets you test descriptions and short descriptions.

How does it work?

Each experiment can compare up to four variants of your app page: your current app page plus up to 3 additional ones. You define the percentage of your audience that is sent to the tested app pages. So, if you send 30% of users to the tested pages and create 3 variants in the experiment, 10% of users should see each tested variant, and the remaining 70% will reach the current version of your app page. Users can’t tell that they’re not viewing the standard app page. Users in all groups get exactly the same experience, with the sole difference being the tested app page feature.
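If you want to sanity-check the split before setting up an experiment, the arithmetic is easy to sketch. The function below is purely illustrative: `split_traffic` and its parameters are made-up names, not part of any Google Play API, and it assumes the tested share is divided evenly between the variants.

```python
def split_traffic(tested_share_percent, n_variants):
    """Return (control_percent, per_variant_percent) for an experiment.

    Hypothetical helper: assumes the tested share is divided evenly
    among the tested variants, as in the 30% / 3 variants example.
    """
    if not 0 < tested_share_percent <= 100:
        raise ValueError("tested share must be between 0 and 100 percent")
    if not 1 <= n_variants <= 3:
        raise ValueError("an experiment holds 1 to 3 tested variants")

    control = 100 - tested_share_percent            # users who see the current page
    per_variant = tested_share_percent / n_variants  # users who see each tested page
    return control, per_variant


control, per_variant = split_traffic(30, 3)
print(f"current page: {control}%, each variant: {per_variant:.2f}%")
```

With 30% of traffic and 3 variants, this gives 70% for the current page and 10% for each tested variant, matching the example above.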

What do I get for results?

Google Play tracks and standardizes the number of installs and uninstalls for each variant. Obviously, the variant that induces the most installs and the fewest uninstalls prevails.
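Why standardize at all? Because the variants don’t get equal traffic, raw install counts aren’t comparable. One plausible way to put them on the same footing is to scale each count by the share of the audience that saw that variant. The sketch below assumes that is what “standardize” means here; the exact formula Google uses isn’t stated in this post, and `scaled_installs` is a made-up name.

```python
def scaled_installs(installs, audience_share_percent):
    """Scale a raw install count to what it would be at 100% of traffic.

    Assumption: standardizing means dividing by the audience share.
    This is an illustration, not Google's documented formula.
    """
    if not 0 < audience_share_percent <= 100:
        raise ValueError("audience share must be between 0 and 100 percent")
    return installs * 100 / audience_share_percent


# Current page saw 70% of users, a tested variant saw 10%.
print(scaled_installs(700, 70))  # current page
print(scaled_installs(120, 10))  # tested variant
```

In this made-up example the variant has far fewer raw installs (120 vs. 700) but comes out ahead once both are scaled to the same audience size.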

The Fine Print

The Experiments tool does have several downsides and restrictions. Some are due to statistical reasons and some are due to… well, because Google said so.

- You can only run one test at a time. It is impossible to run two tests in parallel, even if they target two separate locales.

- You cannot send more than 50% of traffic to the tested app pages. This means that if you test 4 variants (current + 3 more), you can send at most about 16.67% of traffic to each tested variant.

- Results are evaluated at a 90% confidence level, using a two-tailed test.

- Numbers vary widely depending on the variance, the baseline conversion rate and the difference between the tested versions, but you will likely need around 2,000 observations to reach statistical significance. Note that observations are app page views, not installs.
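To get a feel for what “90%, two-tailed” means in practice, here is a rough sketch of a standard two-proportion z-test on page views and installs. This is a textbook method swapped in for illustration: the post doesn’t say which test Google actually runs, and the function name and the numbers below are invented.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(installs_a, views_a, installs_b, views_b,
                          confidence=0.90):
    """Two-tailed z-test for a difference in conversion rates.

    Returns (z, p_value, significant). Illustrative only; not
    necessarily the test Google Play applies.
    """
    rate_a = installs_a / views_a
    rate_b = installs_b / views_b
    # Pooled rate under the null hypothesis that both pages convert equally.
    pooled = (installs_a + installs_b) / (views_a + views_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    if std_err == 0:
        return 0.0, 1.0, False  # no installs (or all installs): nothing to compare
    z = (rate_b - rate_a) / std_err
    # Two-tailed: a difference in either direction counts.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value, p_value < 1 - confidence


# Made-up numbers: 1,000 page views per variant.
z, p, significant = two_proportion_z_test(300, 1000, 360, 1000)
print(f"z={z:.2f}, p={p:.4f}, significant at 90%: {significant}")
```

Try shrinking the gap between the two install counts and you’ll see how quickly the result stops being significant, which is why small differences need far more page views.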

If this isn’t enough, here’s some more information direct from the source.

So, what are you waiting for? Put your lab coat on and start experimenting!
